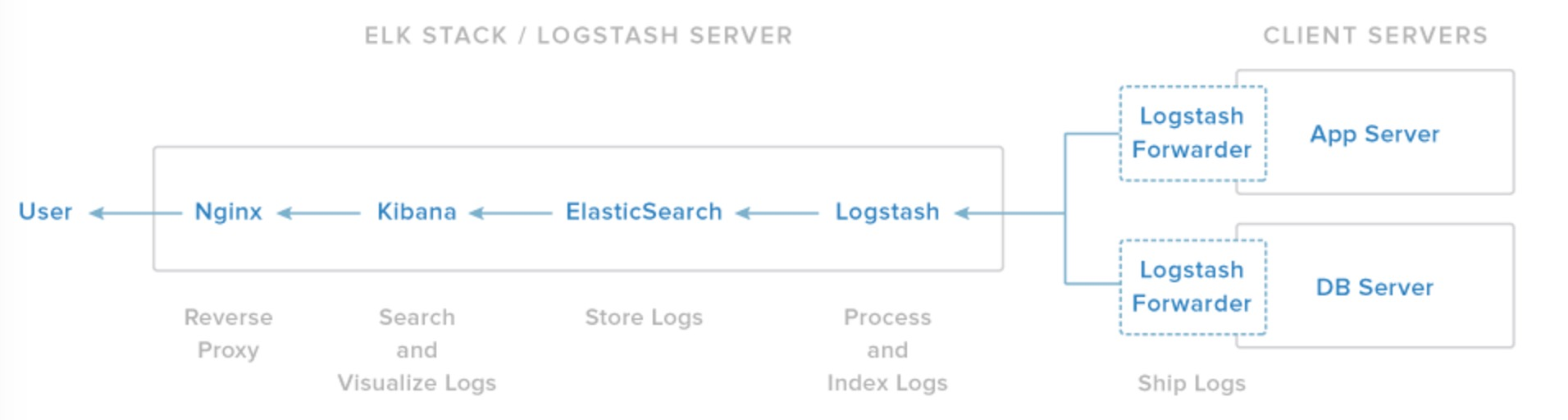

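Logstash is an open-source tool for collecting, parsing, and storing logs. Kibana 4 is a web interface for searching and viewing the logs that Logstash has indexed. Both tools are built on Elasticsearch.

● Logstash: the Logstash server component, which processes incoming logs.

● Elasticsearch: stores all of the logs.

● Kibana 4: the web interface for searching and visualizing logs, served through an nginx reverse proxy.

● Logstash Forwarder: installed on each server that sends logs to Logstash. It acts as the log-shipping agent and talks to the Logstash server over the lumberjack network protocol.

Note: logstash-forwarder is being replaced by Beats. A follow-up post will move this setup to Logstash + Elasticsearch + Beats.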

The ELK architecture is as follows:

Package versions used:

    elasticsearch-1.7.2.tar.gz
    kibana-4.1.2-linux-x64.tar.gz
    logstash-1.5.6-1.noarch.rpm
    logstash-forwarder-0.4.0-1.x86_64.rpm

Deployment: single-node (standalone) mode.

#OS: CentOS release 6.5 (Final)

#Base and JDK

    groupadd elk
    useradd -g elk elk
    passwd elk
    yum install vim lsof man wget ntpdate vixie-cron -y
    crontab -e
    # add this entry to sync the clock every minute:
    */1 * * * * /usr/sbin/ntpdate time.windows.com > /dev/null 2>&1
    service crond restart

Disable SELinux and stop iptables:

    sed -i "s#SELINUX=enforcing#SELINUX=disabled#" /etc/selinux/config
    service iptables stop
    reboot

Install the JDK:

    tar -zxvf jdk-8u92-linux-x64.tar.gz -C /usr/local/
    vim /etc/profile
    # append:
    export JAVA_HOME=/usr/local/jdk1.8.0_92
    export JRE_HOME=/usr/local/jdk1.8.0_92/jre
    export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH
    export CLASSPATH=$CLASSPATH:.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
    # reload the profile:
    source /etc/profile

#Elasticsearch

(For a cluster, install Elasticsearch on the other servers and give every node the same cluster name but a different node name.)

RPM install:

    rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch
    wget -c https://download.elastic.co/elasticsearch/elasticsearch/elasticsearch-1.7.2.noarch.rpm
    rpm -ivh elasticsearch-1.7.2.noarch.rpm

Tar install (the paths in the rest of this post assume this method):

    wget -c https://download.elastic.co/elasticsearch/elasticsearch/elasticsearch-1.7.2.tar.gz
    tar zxvf elasticsearch-1.7.2.tar.gz -C /usr/local/
    cd /usr/local/elasticsearch-1.7.2/
    mkdir -p /data/{db,logs}
    vim config/elasticsearch.yml
    #cluster.name: elasticsearch
    #node.name: "es-node1"
    #node.master: true
    #node.data: true
    path.data: /data/db
    path.logs: /data/logs
    network.host: 192.168.28.131

#Plugin installation

    cd /usr/local/elasticsearch-1.7.2/
    bin/plugin -install mobz/elasticsearch-head    # https://github.com/mobz/elasticsearch-head
    bin/plugin -install lukas-vlcek/bigdesk
    bin/plugin install lmenezes/elasticsearch-kopf

Installing kopf this way fails with a message that the version is too low. The workaround is to download kopf by hand instead of going through the plugin installer.
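The cluster note in the Elasticsearch section (the same cluster name on every server, a different node name on each) can be expressed as a small generator for per-node elasticsearch.yml settings. This is a hypothetical helper sketch: the functions `render_node_config` and `render_cluster`, the cluster name `elk-cluster`, and the three-node layout that reuses the Test1..Test3 addresses from the /etc/hosts step are all assumptions. Discovery settings are not covered.

```python
"""Render per-node elasticsearch.yml settings for a small Elasticsearch 1.7 cluster.

Hypothetical helper: the cluster name and node list are illustrative
assumptions, not values taken from a real deployment.
"""
from typing import Dict


def render_node_config(cluster_name: str, node_name: str, host: str) -> str:
    # Every node shares cluster.name; node.name and network.host differ per node.
    settings = [
        ("cluster.name", cluster_name),
        ("node.name", f'"{node_name}"'),
        ("node.master", "true"),
        ("node.data", "true"),
        ("path.data", "/data/db"),
        ("path.logs", "/data/logs"),
        ("network.host", host),
    ]
    return "\n".join(f"{key}: {value}" for key, value in settings) + "\n"


def render_cluster(cluster_name: str, nodes: Dict[str, str]) -> Dict[str, str]:
    # Map each node name to the text of its elasticsearch.yml settings.
    return {name: render_node_config(cluster_name, name, host) for name, host in nodes.items()}


if __name__ == "__main__":
    # Assumed three-node layout reusing the Test1..Test3 addresses.
    nodes = {
        "es-node1": "192.168.28.131",
        "es-node2": "192.168.28.130",
        "es-node3": "192.168.28.138",
    }
    for name, text in render_cluster("elk-cluster", nodes).items():
        print(f"# ---- {name} ----")
        print(text)
```

Each printed block goes into config/elasticsearch.yml on the matching server. Keeping the shared value in a single argument rules out a typo in cluster.name that would leave one node outside the cluster.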
To install kopf manually:

    cd /usr/local/elasticsearch-1.7.2/plugins
    wget https://github.com/lmenezes/elasticsearch-kopf/archive/master.zip
    unzip master.zip
    mv elasticsearch-kopf-master kopf

These steps are fully equivalent to installing the plugin with the plugin tool. Then give the elk user ownership of the installation and data directories:

    cd /usr/local/
    chown elk:elk elasticsearch-1.7.2/ -R
    chown elk:elk /data/* -R

Install supervisord:

    yum install supervisor -y

Append an elasticsearch section to the end of /etc/supervisord.conf:

    vim /etc/supervisord.conf
    [program:elasticsearch]
    directory = /usr/local/elasticsearch-1.7.2/
    ;command = su -c "/usr/local/elasticsearch-1.7.2/bin/elasticsearch" elk
    command = /usr/local/elasticsearch-1.7.2/bin/elasticsearch
    numprocs = 1
    autostart = true
    startsecs = 5
    autorestart = true
    startretries = 3
    user = elk
    ;stdout_logfile_maxbytes = 200MB
    ;stdout_logfile_backups = 20
    ;stdout_logfile = /var/log/pvs_elasticsearch_stdout.log

#Kibana (make sure the Kibana version matches your Elasticsearch version)

    wget -c https://download.elastic.co/kibana/kibana/kibana-4.1.2-linux-x64.tar.gz
    tar zxvf kibana-4.1.2-linux-x64.tar.gz -C /usr/local/
    cd /usr/local/kibana-4.1.2-linux-x64
    vim config/kibana.yml
    port: 5601
    host: "192.168.28.131"
    elasticsearch_url: "http://192.168.28.131:9200"
    ./bin/kibana -l /var/log/kibana.log

Next, set Kibana up as a service. Since version 4.0, Kibana runs as its own server process listening on a socket. One option is to download a ready-made init script and defaults file:

    #cd /etc/init.d && curl -o kibana https://gist.githubusercontent.com/thisismitch/8b15ac909aed214ad04a/raw/fc5025c3fc499ad8262aff34ba7fde8c87ead7c0/kibana-4.x-init
    #cd /etc/default && curl -o kibana https://gist.githubusercontent.com/thisismitch/8b15ac909aed214ad04a/raw/fc5025c3fc499ad8262aff34ba7fde8c87ead7c0/kibana-4.x-default

Adjust the settings in these files to match your installation and make the init script executable. Alternatively, write the init script yourself:

    cat >> /etc/init.d/kibana <"$KIBANA_LOG" 2>&1 &
            sleep 2
            pidofproc node > $PID_FILE
            RETVAL=$?
            [[ $RETVAL -eq 0 ]] && success || failure
            echo
            [ $RETVAL = 0 ] && touch $LOCK_FILE
            return $RETVAL
        fi
    }

    reload() {
        echo "Reload command is not implemented for this service."
        return $RETVAL
    }

    stop() {
        echo -n "Stopping $DESC : "
        killproc -p $PID_FILE $DAEMON
        RETVAL=$?
        echo
        [ $RETVAL = 0 ] && rm -f $PID_FILE $LOCK_FILE
    }

    case "$1" in
        start)
            start
            ;;
        stop)
            stop
            ;;
        status)
            status -p $PID_FILE $DAEMON
            RETVAL=$?
            ;;
        restart)
            stop
            start
            ;;
        reload)
            reload
            ;;
        *)
            # Invalid arguments: print the usage message.
            echo "Usage: $0 {start|stop|status|restart|reload}" >&2
            exit 2
            ;;
    esac
    EOF
    chmod +x /etc/init.d/kibana

#Nginx

    yum install nginx -y
    vim /etc/nginx/conf.d/elk.conf
    server {
        server_name elk.sudo.com;
        auth_basic "Restricted Access";
        auth_basic_user_file passwd;    # relative to /etc/nginx, i.e. /etc/nginx/passwd
        location / {
            proxy_pass http://192.168.28.131:5601;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection 'upgrade';
            proxy_set_header Host $host;
            proxy_cache_bypass $http_upgrade;
        }
    }

Create the password file. The htpasswd tool is provided by httpd-tools, but here the entry is built with openssl:

    yum install httpd-tools -y                          # provides htpasswd
    echo -n 'sudo:' >> /etc/nginx/passwd                # add the user name
    openssl passwd elk.sudo.com >> /etc/nginx/passwd    # add the password hash (the password is the literal string elk.sudo.com)
    cat /etc/nginx/passwd                               # check the result
    chkconfig nginx on && service nginx start

#Logstash--Setup

    rpm --import http://packages.elasticsearch.org/GPG-KEY-elasticsearch
    vi /etc/yum.repos.d/logstash.repo
    [logstash-1.5]
    name=Logstash repository for 1.5.x packages
    baseurl=http://packages.elasticsearch.org/logstash/1.5/centos
    gpgcheck=1
    gpgkey=http://packages.elasticsearch.org/GPG-KEY-elasticsearch
    enabled=1
    yum install logstash -y

#Create the SSL certificate

Generate the SSL certificate on the Logstash server. There are two ways to do this, one tied to the server's IP address and one tied to its FQDN (DNS name); pick either one.

#1. IP address

In /etc/pki/tls/openssl.cnf, add the following line under the [ v3_ca ] section. 192.168.28.131 is the address of the Logstash server.

    vi /etc/pki/tls/openssl.cnf
    subjectAltName = IP: 192.168.28.131

    cd /etc/pki/tls
    openssl req -config /etc/pki/tls/openssl.cnf -x509 -days 3650 -batch -nodes -newkey rsa:2048 -keyout private/logstash-forwarder.key -out certs/logstash-forwarder.crt

Give -days a large value so that the certificate does not expire.

#2. FQDN

No changes to openssl.cnf are needed:

    cd /etc/pki/tls
    openssl req -subj '/CN=logstash.sudo.com/' -x509 -days 3650 -batch -nodes -newkey rsa:2048 -keyout private/logstash-forwarder.key -out certs/logstash-forwarder.crt

logstash.sudo.com is a domain I only use for testing, so I did not need to add an A record for it.

#Logstash-Config

Add the GeoIP data source (optional; these lines are commented out):

    #wget http://geolite.maxmind.com/download/geoip/database/GeoLiteCity.dat.gz
    #gzip -d GeoLiteCity.dat.gz && mv GeoLiteCity.dat /etc/logstash/.
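The nginx password file above is built with `openssl passwd`. With OpenSSL 1.0.x, as shipped with CentOS 6, that command defaults to the traditional crypt() algorithm, which only looks at the first 8 characters of the password. nginx's auth_basic module also accepts RFC 2307-style `{SCHEME}data` entries, including salted SHA-1 (`{SSHA}`). The sketch below generates such an entry in Python. It is an alternative technique swapped in for illustration, not the author's method. The helpers `ssha_entry` and `verify_entry`, the user `sudo`, and the placeholder password are assumptions.

```python
"""Build an nginx auth_basic_user_file entry using the {SSHA} scheme.

Sketch under assumptions: salted SHA-1 replaces the post's `openssl passwd`
hash. The user name mirrors the post; the password is a placeholder.
"""
import base64
import hashlib
import os


def ssha_entry(user: str, password: str, salt: bytes = b"") -> str:
    # Use a random 8-byte salt unless one is supplied (e.g. for reproducible tests).
    salt = salt or os.urandom(8)
    digest = hashlib.sha1(password.encode("utf-8") + salt).digest()
    # {SSHA} stores base64(SHA1(password + salt) + salt).
    return f"{user}:{{SSHA}}{base64.b64encode(digest + salt).decode('ascii')}"


def verify_entry(entry: str, password: str) -> bool:
    # Split "user:{SSHA}data", take the salt after the 20-byte SHA-1 digest, recompute.
    _, hashed = entry.split(":", 1)
    raw = base64.b64decode(hashed[len("{SSHA}"):])
    digest, salt = raw[:20], raw[20:]
    return hashlib.sha1(password.encode("utf-8") + salt).digest() == digest


if __name__ == "__main__":
    # Print one line suitable for appending to /etc/nginx/passwd.
    print(ssha_entry("sudo", "change-me"))
```

Append the printed line to /etc/nginx/passwd in place of the two-step echo/openssl entry, then reload nginx.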
Logstash configuration files use a JSON-like syntax of their own (they are not strict JSON). They live in /etc/logstash/conf.d, and a configuration has three parts: inputs, filters, and outputs.

First, create 01-lumberjack-input.conf to set up the lumberjack input. Lumberjack is the protocol that Logstash Forwarder speaks.

    vi /etc/logstash/conf.d/01-lumberjack-input.conf
    input {
      lumberjack {
        port => 5043
        type => "logs"
        ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"
        ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"
      }
    }

Next, create 11-nginx.conf to filter nginx logs:

    vi /etc/logstash/conf.d/11-nginx.conf
    filter {
      if [type] == "nginx" {
        grok {
          match => { "message" => "%{IPORHOST:clientip} - %{NOTSPACE:remote_user} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:method} %{NOTSPACE:request}(?: %{URIPROTO:proto}/%{NUMBER:httpversion})?|%{DATA:rawrequest})\" %{NUMBER:status} (?:%{NUMBER:size}|-) %{QS:referrer} %{QS:agent} %{QS:xforwardedfor}" }
          add_field => [ "received_at", "%{@timestamp}" ]
          add_field => [ "received_from", "%{host}" ]
        }
        date {
          match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]
        }
    #    geoip {
    #      source => "clientip"
    #      add_tag => [ "geoip" ]
    #      fields => ["country_name", "country_code2","region_name", "city_name", "real_region_name", "latitude", "longitude"]
    #      remove_field => [ "[geoip][longitude]", "[geoip][latitude]" ]
    #    }
      }
    }

This filter looks for logs labeled with the "nginx" type (set by Logstash Forwarder) and uses grok to parse incoming nginx log lines into structured, queryable fields. The type here must match the type set in the logstash-forwarder configuration.

Also pay attention to the nginx log format; I use the default log_format here. When nginx acts as a load-balancing reverse proxy, the format can be changed to:

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $upstream_response_time $request_time $body_bytes_sent '
                    '"$http_referer" "$http_user_agent" "$http_x_forwarded_for" "$request_body" '
                    '$scheme $upstream_addr';

If the log format differs from what the grok pattern expects, the grok pattern has to be rewritten. You can debug patterns with the online Grok Debugger at http://grokdebug.herokuapp.com/. When ELK shows no data, this is where the problem lies in most cases. If grok fails to match your logs, do not move on to the next steps; keep testing until the pattern matches. The pattern list at http://grokdebug.herokuapp.com/patterns# is a useful reference when writing your own rules later.

Finally, create a file that defines the outputs:

    vi /etc/logstash/conf.d/99-lumberjack-output.conf
    output {
      if "_grokparsefailure" in [tags] {
        file { path => "/var/log/logstash/grokparsefailure-%{type}-%{+YYYY.MM.dd}.log" }
      }
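      elasticsearch {
        host => "192.168.28.131"
        protocol => "http"
        index => "logstash-%{type}-%{+YYYY.MM.dd}"
        document_type => "%{type}"
        workers => 5
        template_overwrite => true
      }
      #stdout { codec => rubydebug }
    }

Every event is stored in Elasticsearch. Events that grok failed to parse (tagged _grokparsefailure) are also written to a file. Name any filter files you add later with a prefix between 01 and 99, because Logstash reads the files in a configuration directory in filename order.

While debugging, send events to standard output (the commented-out stdout line) instead of Elasticsearch to make troubleshooting easier. Also check the Logstash logs: many errors show up there, which makes them easy to locate.

Before starting the Logstash service, check the configuration:

    /opt/logstash/bin/logstash --configtest -f /etc/logstash/conf.d/
    Configuration OK

You can also check a single file by name. Keep fixing the configuration until the check reports OK, because otherwise the Logstash service will not start. Then start the Logstash service.

#logstash-forwarder

Copy the public certificate created during the Logstash setup (/etc/pki/tls/certs/logstash-forwarder.crt) to every logstash-forwarder server, that is, every server whose logs you want to collect. Then install and configure the forwarder:

    wget https://download.elastic.co/logstash-forwarder/binaries/logstash-forwarder-0.4.0-1.x86_64.rpm
    rpm -ivh logstash-forwarder-0.4.0-1.x86_64.rpm
    vi /etc/logstash-forwarder.conf
    {
      "network": {
        "servers": [ "192.168.28.131:5043" ],
        "ssl ca": "/etc/pki/tls/certs/logstash-forwarder.crt",
        "timeout": 30
      },
      "files": [
        {
          "paths": [ "/var/log/nginx/*-access.log" ],
          "fields": { "type": "nginx" }
        }
      ]
    }

The configuration file is JSON. If it is malformed, the logstash-forwarder service will not start.

All that remains is to start the services. First add the host names to /etc/hosts:

    # without these entries Elasticsearch fails to start because it cannot resolve the Test* host names
    echo -e "192.168.28.131 Test1\n192.168.28.130 Test2\n192.168.28.138 Test3" >> /etc/hosts

Start Elasticsearch as the elk user. Alternatively, manage it with supervisord so that it starts at boot along with the other services:

    su - elk
    cd /usr/local/elasticsearch-1.7.2
    nohup ./bin/elasticsearch &

On the ELK server:

    service logstash restart
    service kibana restart

Visit http://elk.sudo.com:9200/ to check that Elasticsearch started successfully.

On the client:

    service nginx start && service logstash-forwarder start

#Using Redis as a log queue

Create the corresponding configuration files:

    vi /etc/logstash/conf.d/redis-input.conf
    input {
      lumberjack {
        port => 5043
        type => "logs"
        ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"
        ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"
      }
    }
    filter {
      if [type] == "nginx" {
        grok {
          match => { "message" => "%{IPORHOST:clientip} - %{NOTSPACE:remote_user} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:method} %{NOTSPACE:request}(?: %{URIPROTO:proto}/%{NUMBER:httpversion})?|%{DATA:rawrequest})\" %{NUMBER:status} (?:%{NUMBER:size}|-) %{QS:referrer} %{QS:agent} %{QS:xforwardedfor}" }
          add_field => [ "received_at", "%{@timestamp}" ]
          add_field => [ "received_from", "%{host}" ]
        }
        date {
          match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]
        }
      }
    }
    output {
      #### push received events onto the Redis list (message queue) ####
      redis {
        host => "127.0.0.1"
        port => 6379
        data_type => "list"
        key => "logstash:redis"
      }
    }

    vi /etc/logstash/conf.d/redis-output.conf
    input {
      # read from Redis
      redis {
        data_type => "list"
        key => "logstash:redis"
        host => "192.168.28.131"    # redis-server
        port => 6379
        #threads => 5
      }
    }
    output {
      elasticsearch {
        host => "192.168.28.131"
        protocol => "http"
        index => "logstash-%{type}-%{+YYYY.MM.dd}"
        document_type => "%{type}"
        workers => 36
        template_overwrite => true
      }
      #stdout { codec => rubydebug }
    }

    /opt/logstash/bin/logstash --configtest -f /etc/logstash/conf.d/
    Configuration OK

Logstash 1.5 merges all of its configuration files into a single pipeline. If redis-input.conf and redis-output.conf are loaded by the same Logstash process, events read back from Redis also reach the redis output and are queued again. If the 01/11/99 files are still present as well, their lumberjack input on port 5043 clashes with the one in redis-input.conf. The usual layout is a shipper instance that runs redis-input.conf and a separate indexer instance that runs redis-output.conf.

Log in to Redis and query it. You should see that the log entries have been written under the corresponding key.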
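Since most "no data in ELK" problems come from a grok pattern that does not match, it can help to check sample lines offline before deploying the nginx filter from 11-nginx.conf (reused in redis-input.conf). The sketch below is a simplified Python approximation, not Logstash's grok engine. Each named group loosely mirrors the grok pattern of the same name (IPORHOST, NOTSPACE, HTTPDATE, QS, ...) but is looser than the real definitions, so a line that matches here can still fail in Logstash. The timestamp is parsed with the same layout as the date filter. The function `parse_nginx_line` and the `index` key are hypothetical names.

```python
"""Approximate the nginx grok + date filters in Python to pre-check log lines.

Simplified sketch: the regex groups stand in for grok patterns of the same
name but are looser than the real grok definitions.
"""
import re
from datetime import datetime, timezone
from typing import Dict, Optional

QS = r'"(?:[^"\\]|\\.)*"'  # quoted string, quotes included (like grok's QS)

NGINX_LINE = re.compile(
    r'(?P<clientip>\S+) - (?P<remote_user>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<method>\w+) (?P<request>\S+)(?: (?P<proto>[A-Za-z]+)/(?P<httpversion>[\d.]+))?'
    r'|(?P<rawrequest>.*?))" '
    r'(?P<status>\d+) (?:(?P<size>\d+)|-) '
    rf'(?P<referrer>{QS}) (?P<agent>{QS}) (?P<xforwardedfor>{QS})'
)


def parse_nginx_line(line: str, log_type: str = "nginx") -> Optional[Dict[str, str]]:
    """Return the extracted fields plus the target index, or None on a parse failure."""
    m = NGINX_LINE.search(line)
    if m is None:
        return None  # Logstash would tag this event with _grokparsefailure
    # Like grok, only keep the groups that actually matched.
    fields = {k: v for k, v in m.groupdict().items() if v is not None}
    # Same layout as the date filter's "dd/MMM/YYYY:HH:mm:ss Z".
    ts = datetime.strptime(fields["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    utc = ts.astimezone(timezone.utc)
    fields["@timestamp"] = utc.isoformat()
    # %{+YYYY.MM.dd} in the index name is rendered from @timestamp in UTC.
    fields["index"] = f"logstash-{log_type}-{utc:%Y.%m.%d}"
    return fields
```

With a +0800 server clock, this makes a common surprise visible. A line stamped `01/Oct/2016:07:30:00 +0800` gets the @timestamp `2016-09-30T23:30:00+00:00` and lands in `logstash-nginx-2016.09.30`. In other words, events logged before 08:00 local time go into the previous day's index.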

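logstash-forwarder will not start if /etc/logstash-forwarder.conf is malformed JSON, so a quick pre-flight check before running `service logstash-forwarder start` can save a round trip. This is a hypothetical helper (`check_forwarder_config`), not logstash-forwarder's own validation. It only confirms that the file parses as JSON and that the keys used in this post are present: `network.servers`, `"ssl ca"`, `files[].paths`, and `fields.type`, which the nginx filter depends on.

```python
"""Pre-flight check for /etc/logstash-forwarder.conf (hypothetical helper).

Only verifies that the file parses as JSON and that the keys used in this post
are present; it is not logstash-forwarder's own validation logic.
"""
import json
import sys
from typing import List


def check_forwarder_config(text: str) -> List[str]:
    """Return a list of problems; an empty list means the config looks usable."""
    try:
        cfg = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(cfg, dict):
        return ["top-level value must be a JSON object"]

    problems: List[str] = []
    network = cfg.get("network")
    if not isinstance(network, dict):
        problems.append("network section is missing")
        network = {}
    if not network.get("servers"):
        problems.append("network.servers is missing or empty")
    if not network.get("ssl ca"):
        problems.append('network."ssl ca" is missing')

    files = cfg.get("files")
    if not isinstance(files, list) or not files:
        problems.append("files is missing or empty")
        files = []
    for i, entry in enumerate(files):
        if not isinstance(entry, dict):
            problems.append(f"files[{i}] must be a JSON object")
            continue
        if not entry.get("paths"):
            problems.append(f"files[{i}].paths is missing or empty")
        # The Logstash filters in this post branch on [type], so it must be set.
        fields = entry.get("fields")
        if not isinstance(fields, dict) or "type" not in fields:
            problems.append(f"files[{i}].fields.type is not set")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 check_forwarder.py /etc/logstash-forwarder.conf
    with open(sys.argv[1], encoding="utf-8") as fh:
        for line in check_forwarder_config(fh.read()) or ["config looks OK"]:
            print(line)
```

Run it on each client after editing the file (the script name check_forwarder.py is also an assumption). Any line other than "config looks OK" points at the key to fix before starting the service.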
Originally published at:

Article URL: //gulass.cn/elk.html. Editor: 何云艳; reviewer: 逄增宝.
